Bias and Variance in Machine Learning Algorithms

The prediction error of a machine learning algorithm can be broken down into three types: irreducible error, bias error, and variance error.
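
For squared-error loss, this three-way split can be written as a single identity. The notation below is an assumption introduced only for illustration: the data are generated as $y = f(x) + \varepsilon$ with zero-mean noise of variance $\sigma^2$, $\hat{f}$ is the model learned from a random training set, and the expectation is taken over training sets and noise.

$$
\mathbb{E}\big[(y - \hat{f}(x))^2\big]
= \underbrace{\big(\mathbb{E}[\hat{f}(x)] - f(x)\big)^2}_{\text{bias}^2}
+ \underbrace{\mathbb{E}\big[(\hat{f}(x) - \mathbb{E}[\hat{f}(x)])^2\big]}_{\text{variance}}
+ \underbrace{\sigma^2}_{\text{irreducible error}}
$$

Only the first two terms depend on the learning algorithm; the last term is fixed by the data itself.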

Irreducible error

As the name suggests, irreducible error is error that cannot be reduced, whichever learning algorithm we choose, because it is caused by unknown variables. Bias and variance errors, by contrast, are reducible: we can reduce them by using an appropriate learning algorithm.

Bias error

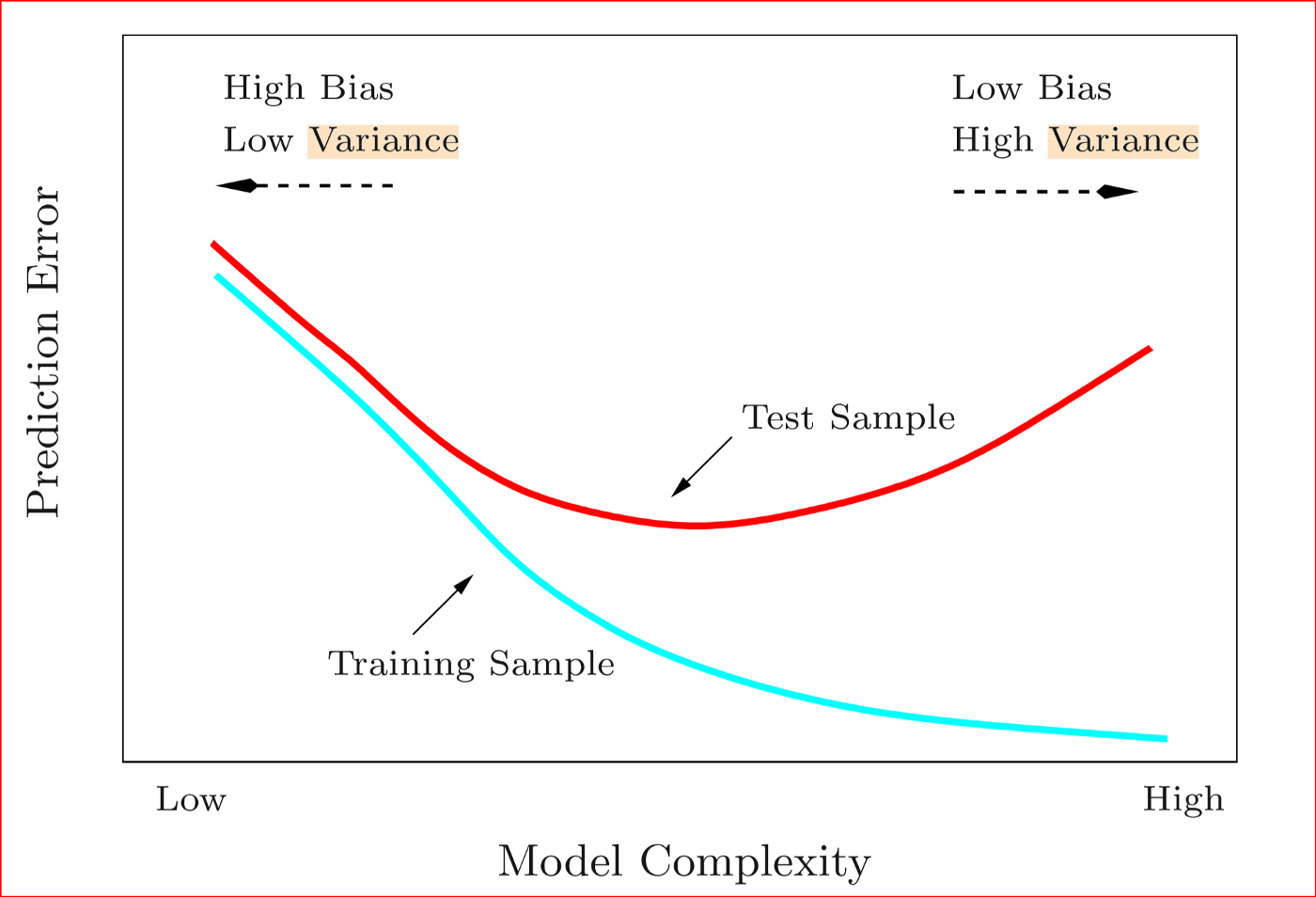

A model's bias is the set of prior assumptions the model makes so that the target function is easier to learn. For example, a linear algorithm assumes that the target function is linear, so if the target function is non-linear, the model will have a bias error. Less-flexible algorithms of this kind are easy to learn and understand, but they often have lower predictive performance on complex problems than more flexible algorithms. In general, less-flexible algorithms (e.g., linear regression, linear discriminant analysis, and logistic regression) have higher bias than complex or flexible algorithms (e.g., neural networks, decision trees, k-Nearest Neighbors, and support vector machines).
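
To make the linear example concrete, here is a minimal sketch, assuming a quadratic target `y = x**2` with no noise at all. The helper names `fit_line` and `mse` are illustrative only. Because the data are noise-free, any error that remains comes purely from the linear assumption, that is, from bias.

```python
# Fit a straight line to a non-linear (quadratic) target by least squares.

def fit_line(xs, ys):
    """Return (slope, intercept) of the least-squares line through the points."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def mse(preds, ys):
    """Mean squared error between predictions and true values."""
    return sum((p - y) ** 2 for p, y in zip(preds, ys)) / len(ys)

xs = [i / 10 for i in range(-20, 21)]  # 41 evenly spaced points in [-2, 2]
ys = [x ** 2 for x in xs]              # noise-free, non-linear target (assumed)

slope, intercept = fit_line(xs, ys)
line_mse = mse([slope * x + intercept for x in xs], ys)

# The best possible line is essentially flat here, and its error stays
# well above zero even though the data contain no noise.
print(f"slope={slope:.3f}, intercept={intercept:.3f}, mse={line_mse:.3f}")
```

Adding more training points would not help in this setup: the error comes from the model's assumption, not from a lack of data.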

Variance error

Assume we train the same model on two different training datasets, N and M. Variance refers to how much the learned model changes when a different training dataset is used. Some change is expected whenever the training data changes, but it should not be too large. Low-variance algorithms change only slightly when a different training dataset is used, while high-variance algorithms change substantially. Flexible or complex algorithms (e.g., support vector machines, decision trees, neural networks, and k-Nearest Neighbors) tend to have high variance, whereas less-flexible algorithms (e.g., linear regression, linear discriminant analysis, and logistic regression) tend to have low variance.
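
The N-versus-M comparison can be sketched in a few lines. Everything specific here is an assumption chosen for illustration: the target `sin(x)`, the noise level, the sample size, the seed, and the helper names. A straight-line fit stands in for a less-flexible algorithm and a 1-nearest-neighbor predictor for a flexible one. The sketch trains each on two independently drawn training sets and measures how much the predictions change.

```python
import math
import random

def sample_training_set(rng, n=50, noise=0.5):
    """Draw n noisy observations of sin(x) for x in [0, 3]."""
    xs = [rng.uniform(0.0, 3.0) for _ in range(n)]
    ys = [math.sin(x) + rng.gauss(0.0, noise) for x in xs]
    return xs, ys

def fit_line(xs, ys):
    """Less-flexible model: least-squares straight line."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return lambda x: slope * x + intercept

def fit_1nn(xs, ys):
    """Flexible model: predict the label of the closest training point."""
    def predict(x):
        nearest = min(range(len(xs)), key=lambda i: abs(xs[i] - x))
        return ys[nearest]
    return predict

def mean_sq_change(model_a, model_b, grid):
    """Average squared difference between two models' predictions."""
    return sum((model_a(x) - model_b(x)) ** 2 for x in grid) / len(grid)

rng = random.Random(0)
train_n = sample_training_set(rng)  # training dataset N
train_m = sample_training_set(rng)  # training dataset M
grid = [3.0 * i / 99 for i in range(100)]

line_change = mean_sq_change(fit_line(*train_n), fit_line(*train_m), grid)
knn_change = mean_sq_change(fit_1nn(*train_n), fit_1nn(*train_m), grid)

print(f"change in line predictions: {line_change:.4f}")
print(f"change in 1-NN predictions: {knn_change:.4f}")
```

In this setup the 1-nearest-neighbor predictions should shift far more between N and M than the line's predictions do, which is what high variance means in practice. A single pair of datasets gives only a rough picture; averaging over many pairs would give a steadier estimate.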

Finally, the goal of any supervised learning algorithm is to achieve both low bias and low variance. Achieving both is the key to the success of any supervised learning algorithm. But how can we achieve both at once? That is where the idea of the bias-variance tradeoff comes into play.

Summary:

- Non-complex machine learning algorithms have high bias and low variance.

- Complex/flexible machine learning algorithms have low bias but high variance.